Introduction

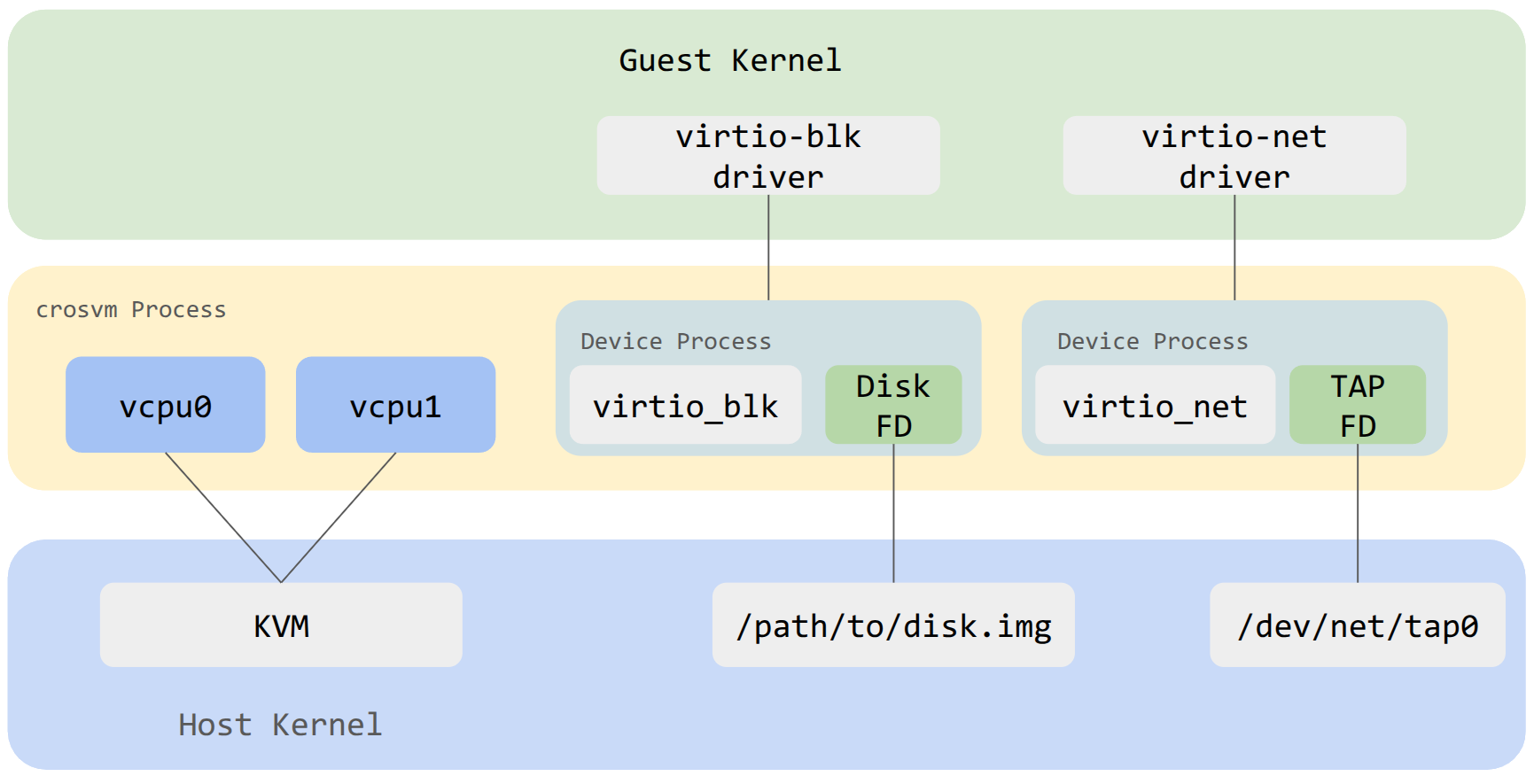

The crosvm project is a hosted (a.k.a. type-2) virtual machine monitor similar to QEMU-KVM or VirtualBox.

It is a VMM that can run untrusted operating systems in a sandboxed environment. crosvm focuses on safety first and foremost, both in its language of choice (Rust) and through its runtime sandbox system. Each virtual device (disk, network, etc.) is by default executed inside a minijail sandbox, isolated from the rest. In case of an exploit or vulnerability, this sandbox prevents an attacker from escaping and harming the host operating system. On top of that, crosvm also relies on a syscall security policy that prevents unwanted system calls from being executed by a compromised device.

Initially it was intended to be used with KVM and Linux, but it now also supports other platforms, such as Windows.

To run crosvm, all that is needed is an operating system image (a root file system plus a kernel); crosvm will run it through the platform's hypervisor. See the Example Usage page to get started, or visit the Building Crosvm section to compile your own from source.

- Announcements

- Developer Mailing List

- #crosvm on matrix.org

- Source code

- GitHub mirror

- API documentation, useful for searching API.

- Files for this book are under /docs/.

- Public issue tracker

- For Googlers: See go/crosvm#filing-bugs.

Building Crosvm

This chapter describes how to build crosvm on each supported host OS (Linux and Windows).

Prerequisite: install Rust.

If you are targeting ChromeOS, please see Integration.

Building Crosvm on Linux

This page describes how to build and develop crosvm on Linux. If you are targeting ChromeOS, please see Integration.

Checking out

Obtain the source code via git clone.

git clone https://chromium.googlesource.com/crosvm/crosvm

Setting up the development environment

Crosvm uses submodules to manage external dependencies. Initialize them via:

git submodule update --init

It is recommended to enable automatic recursive operations to keep the submodules in sync with the main repository (but do not push them, as that can conflict with repo):

git config submodule.recurse true

git config push.recurseSubmodules no

Crosvm development works best on Debian derivatives. We provide a script to install the necessary packages on Debian, Ubuntu or gLinux:

./tools/setup

For other systems, please see below for instructions on Using the development container.

Using the development container

We provide a Debian container with the required packages installed. It can be started with Podman or Docker.

NOTE: to use Podman, you will need to install passt.

sudo apt install passt

KVM access on Linux systems is typically managed via supplemental user groups. To access KVM from inside the development container on such systems, Podman has to use the 'crun' runtime, which supports preserving the user's supplemental groups when starting a container.

sudo apt install crun

Once the prerequisites are installed, start the container with:

./tools/dev_container

The container image is big and may take a while to download when first used. Once started, you can follow all instructions in this document within the container shell.

Instead of using the interactive shell, commands to execute can be provided directly:

./tools/dev_container cargo build

Note: The container and build artifacts are preserved between calls to ./tools/dev_container. If you wish to start fresh, use the --clean flag.

Building a binary

If you simply want to try crosvm, run cargo build. The executable is then generated at ./target/debug/crosvm. If you are using the development container, the executable will be inside the dev container at /scratch/cargo_target/debug/crosvm.

Now you can move to Example Usage.

If you want to enable additional features, use the --features flag (e.g. cargo build --features=gdb).

Development

Running all tests

Crosvm's integration tests have special requirements for execution (see Testing), so we use a special test runner. By default it will only execute unit tests:

./tools/run_tests

To execute integration tests as well, you need to specify a device-under-test (DUT). The most reliable option is to use the built-in VM for testing:

./tools/run_tests --dut=vm

However, you can also use your local host directly. Your mileage may vary depending on your host kernel version and permissions.

./tools/run_tests --dut=host

Since we have some architecture-dependent code, we also have the option of running unit tests for aarch64, riscv64, and windows (mingw64). These will use an emulator to execute (QEMU or wine):

./tools/run_tests --platform=aarch64

./tools/run_tests --platform=riscv64

./tools/run_tests --platform=mingw64

When working on a machine that does not support cross-compilation (e.g. gLinux), you can use the dev container to build and run the tests.

./tools/dev_container ./tools/run_tests --platform=aarch64

Presubmit checks

To verify changes before submitting, use the presubmit script. To ensure the toolchains for all platforms are available, it is recommended to run it inside the dev container.

./tools/dev_container ./tools/presubmit

This runs clippy and the formatters, and runs all tests for all platforms. The same checks will also be run by our CI system before changes are merged into main.

See tools/presubmit -h for details on the options for selecting which checks should be run to trade off speed and accuracy.

Cross-compilation

Crosvm is built and tested on x86, aarch64, and riscv64. Your system needs some setup work to be able to cross-compile for other architectures, hence it is recommended to use the development container, which will have everything configured.

Note: Cross-compilation is not supported on gLinux. Please use the development container.

Enable foreign architectures

Your host needs to be set up to allow installation of foreign architecture packages.

On Debian this is as easy as:

sudo dpkg --add-architecture arm64

sudo dpkg --add-architecture riscv64

sudo apt update

On Ubuntu this is a little harder and needs some manual modifications of APT sources.

With that enabled, the following scripts will install the needed packages:

./tools/setup-aarch64

./tools/setup-riscv64

Configuring wine and mingw64

Crosvm is also compiled and tested on Windows. Some limited testing can be done with mingw64 and wine on Linux machines. Use the provided setup script to install the needed dependencies.

./tools/setup-mingw64

Configure cargo for cross-compilation

Cargo requires additional configuration to support cross-compilation. You can copy the provided example config to your cargo configuration:

cat .cargo/config.debian.toml >> ${CARGO_HOME:-~/.cargo}/config.toml

Note

In case of cross-compilation, the crosvm executable will be at ./target/<target>/debug/crosvm. If cross-compiling inside the development container, the executable will be inside the dev container at /scratch/cargo_target/<target>/debug/crosvm.

For example, for aarch64 the target is aarch64-unknown-linux-gnu, and you can build with:

cargo build --target aarch64-unknown-linux-gnu

Known issues

- Devices can't be jailed if /var/empty doesn't exist. Run sudo mkdir -p /var/empty to work around this for now.

- You need read/write permissions for /dev/kvm to run tests or other crosvm instances. Usually it's owned by the kvm group, so run sudo usermod -a -G kvm $USER and then log out and back in again to fix this.

- Some other features (networking) require CAP_NET_ADMIN, so those usually need to be run as root.

Building Crosvm on Windows

This page describes how to build and develop crosvm on Windows. If you are targeting Linux, please see Building Crosvm on Linux.

NOTE: The following instructions assume that:

- git is installed and the git command exists in your Env:PATH

- the commands are run in PowerShell

Create a base directory, C:\src:

mkdir C:\src

cd C:\src

Checking out

Obtain the source code via git clone.

git clone https://chromium.googlesource.com/crosvm/crosvm

Setting up the development environment

Crosvm uses submodules to manage external dependencies. Initialize them via:

cd crosvm

git submodule update --init

It is recommended to enable automatic recursive operations to keep the submodules in sync with the main repository (but do not push them, as that can conflict with repo):

git config submodule.recurse true

git config push.recurseSubmodules no

install-deps.ps1 installs the tools needed to build crosvm on Windows. In addition to installing the tools, the script also sets up environment variables.

The script below may prompt you to install the MSVC toolchain via Visual Studio Community Edition.

Set-ExecutionPolicy Unrestricted -Scope CurrentUser

./tools/install-deps.ps1

NOTE: The above step sets up environment variables. You may need to either start a new PowerShell session or reload the environment variables.

Build crosvm

cargo build --features all-msvc64,whpx

Running Crosvm

This chapter includes instructions on how to run crosvm.

- Example Usage: Functioning examples to get started.

- Advanced Usage: Details on how to enable and configure features and devices of crosvm.

- Custom Kernel / Rootfs: Instructions on how to build a kernel and rootfs for crosvm.

- Options: How to specify command-line options.

- System Requirements: Host and guest requirements for running crosvm

- Features: Feature flags available when building crosvm

Example Usage

This section will explain how to use a prebuilt Ubuntu image as the guest OS. If you want to prepare a kernel and rootfs by yourself, please see Custom Kernel / Rootfs.

The example code for this guide is available in tools/examples

Run a simple Guest OS (using virt-builder)

To run a VM with crosvm, we need two things: A kernel binary and a rootfs. You can build those yourself or use prebuilt cloud/vm images that some linux distributions provide.

Preparing the guest OS image

One of the more convenient ways to customize these VM images is to use virt-builder from the libguestfs-tools package.

# Build a simple ubuntu image and create a user with no password.

virt-builder ubuntu-20.04 \

--run-command "useradd -m -g sudo -p '' $USER ; chage -d 0 $USER" \

-o ./rootfs

# Packages can be pre-installed to the image using

# --install PACKAGE_NAME

# Ex: virt-builder ubuntu-20.04 ... --install openssh-server,ncat

# In this example, the ubuntu image will come pre-installed with OpenSSH-server and with Ncat.

Extract the Kernel (And initrd)

Crosvm directly runs the kernel instead of going through a bootloader, so we need to extract the kernel binary from the image. virt-builder has a tool for that:

virt-builder --get-kernel ./rootfs -o .

The kernel binary is going to be saved in the same directory.

Note: Most distributions use an init ramdisk, which is extracted at the same time and needs to be passed to crosvm as well.

Add the user to the kvm group

To run crosvm without sudo, the user should be added to the kvm group in order to obtain access to the /dev/kvm file. If the user is already in the kvm group, skip this part. Otherwise, execute the command below.

sudo adduser "$USER" kvm

You can check if the user is in the kvm group or not with the following command:

groups | grep kvm

After executing the adduser command above, please log out and log back in for the kvm group membership to take effect.

Launch the VM

With all the files in place, crosvm can be run:

# Create `/var/empty` where crosvm can do chroot for jailing each virtio device.

# Devices can't be jailed if /var/empty doesn't exist.

# You can change this directory (/var/empty) by setting the environment variable DEFAULT_PIVOT_ROOT.

sudo mkdir -p /var/empty

# Run crosvm.

# The rootfs is an image of a partitioned hard drive, so we need to tell

# the kernel which partition to use (vda5 in case of ubuntu-20.04).

cargo run --no-default-features -- run \

--rwdisk ./rootfs \

--initrd ./initrd.img-* \

-p "root=/dev/vda5" \

./vmlinuz-*

The full source for this example can be executed directly:

./tools/examples/example_simple

The login username will be the username on the host, and you will be prompted to choose a password on the first login in the VM.

Add Networking Support

Networking support is most easily set up with a TAP device on the host, which can be done with:

./tools/examples/setup_network

The script will create a TAP device called crosvm_tap and set up routing. For details, see the instructions for network devices.

With the crosvm_tap in place we can use it when running crosvm:

# Use the previously configured crosvm_tap device for networking.

cargo run -- run \

--rwdisk ./rootfs \

--initrd ./initrd.img-* \

--net tap-name=crosvm_tap \

-p "root=/dev/vda5" \

./vmlinuz-*

To use the network device in the guest, we need to assign it a static IP address. In our example guest this can be done via a netplan config:

First, create a guest directory and the netplan config:

mkdir guest/

touch guest/01-netcfg.yaml

Then edit guest/01-netcfg.yaml and add the following contents:

# Configure network with static IP 192.168.10.2
network:
  version: 2
  renderer: networkd
  ethernets:
    enp0s4:
      addresses: [192.168.10.2/24]
      nameservers:
        addresses: [8.8.8.8]
      gateway4: 192.168.10.1

The netplan config can be installed when building the VM image:

builder_args=(

# Create user with no password.

--run-command "useradd -m -g sudo -p '' $USER ; chage -d 0 $USER"

# Configure network via netplan config in 01-netcfg.yaml

--hostname crosvm-test

# $SRC=/path/to/crosvm

--copy-in "$SRC/guest/01-netcfg.yaml:/etc/netplan/"

# Install sshd.

--install openssh-server

-o rootfs

)

# Inject authorized key for the user.

# If the SSH RSA public key file is missing, you will need to login to

# the VM the first time and change passwords before you can login via SSH.

ID_RSA_PUB="$HOME/.ssh/id_rsa.pub"

if [ -r "${ID_RSA_PUB}" ]; then

builder_args+=("--ssh-inject" "${USER}:file:${ID_RSA_PUB}")

fi

virt-builder ubuntu-20.04 "${builder_args[@]}"

This also allows us to use SSH to access the VM. The script above will install your ~/.ssh/id_rsa.pub into the VM, so you'll be able to SSH from the host to the guest with no password:

ssh 192.168.10.2

WARNING: If you are on a gLinux machine, then you will need to disable Corp SSH Helper:

ssh -oProxyCommand=none 192.168.10.2

The full source for this example can be executed directly:

./tools/examples/example_network

Add GUI support

First, you'll want to add a desktop environment to the VM image:

builder_args=(

# Create user with no password.

--run-command "useradd -m -g sudo -p '' $USER ; chage -d 0 $USER"

# Configure network. See ./example_network

--hostname crosvm-test

--copy-in "$SRC/guest/01-netcfg.yaml:/etc/netplan/"

# Install a desktop environment to launch

--install xfce4

-o rootfs

)

virt-builder ubuntu-20.04 "${builder_args[@]}"

Then you can use the --gpu argument to specify how the GPU output of the VM should be handled. In this example we are using the virglrenderer backend and output into an X11 window on the host.

# Enable the GPU and keyboard/mouse input. Since this will be a much heavier

# system to run we also need to increase the cpu/memory given to the VM.

# Note: GDM does not allow you to set your password on first login, you have to

# log in on the command line first to set a password.

cargo run --features=gpu,x,virgl_renderer -- run \

--cpus 4 \

--mem 4096 \

--gpu backend=virglrenderer,width=1920,height=1080 \

--display-window-keyboard \

--display-window-mouse \

--net tap-name=crosvm_tap \

--rwdisk ./rootfs \

--initrd ./initrd.img-* \

-p "root=/dev/vda5" \

./vmlinuz-*

The full source for this example can be executed directly (note that you may want to run ./tools/examples/setup_network first):

./tools/examples/example_desktop

Advanced Usage

To see the usage information for your version of crosvm, run crosvm or crosvm run --help.

Specify log levels

To change the log levels printed while running crosvm:

crosvm --log-level=LEVEL run

Ex:

crosvm --log-level=debug run

To change the log levels printed for a specific module:

crosvm --log-level=devices::usb::xhci=LEVEL run

Those can be combined to print different log levels for modules and for crosvm:

crosvm --log-level=devices::usb::xhci=LEVEL1,LEVEL2 run

Where LEVEL1 will be applied to the module "devices::usb::xhci" and LEVEL2 will be applied to the rest of crosvm.

Available LEVELs: off, error, warn, info (default), debug, trace (only available in debug builds).

Note: Each level prints all messages of the same or higher severity. For example, info prints error, warn, and info messages.
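The --log-level syntax above can be modeled as a comma-separated list where module=LEVEL sets a per-module filter and a bare LEVEL sets the default for everything else. The following is a minimal sketch of that parsing and of the severity rule from the note, assuming the ordering off < error < warn < info < debug < trace. The Level and LogSpec types are hypothetical illustrations, not crosvm's logging code, and module names are matched exactly here (real log filters usually also match submodules by prefix).

```rust
use std::collections::HashMap;

/// Log levels in increasing verbosity; `Off` disables output.
/// Hypothetical type for illustration, not crosvm's own definition.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum Level {
    Off,
    Error,
    Warn,
    Info,
    Debug,
    Trace,
}

impl Level {
    fn parse(s: &str) -> Option<Level> {
        match s {
            "off" => Some(Level::Off),
            "error" => Some(Level::Error),
            "warn" => Some(Level::Warn),
            "info" => Some(Level::Info),
            "debug" => Some(Level::Debug),
            "trace" => Some(Level::Trace),
            _ => None,
        }
    }
}

/// A parsed --log-level value: per-module overrides plus a default level.
#[derive(Debug)]
struct LogSpec {
    default: Level,
    modules: HashMap<String, Level>,
}

impl LogSpec {
    /// Parses values such as "devices::usb::xhci=debug,warn".
    /// Returns None if any level name is unknown.
    fn parse(spec: &str) -> Option<LogSpec> {
        let mut parsed = LogSpec {
            default: Level::Info,
            modules: HashMap::new(),
        };
        for entry in spec.split(',') {
            match entry.split_once('=') {
                // "module=LEVEL" applies only to that module (exact match).
                Some((module, level)) => {
                    parsed.modules.insert(module.to_string(), Level::parse(level)?);
                }
                // A bare "LEVEL" applies to the rest of crosvm.
                None => parsed.default = Level::parse(entry)?,
            }
        }
        Some(parsed)
    }

    /// A message is printed if its level is at least as severe as the filter.
    fn enabled(&self, module: &str, level: Level) -> bool {
        let max = self.modules.get(module).copied().unwrap_or(self.default);
        level != Level::Off && level <= max
    }
}
```

For example, with devices::usb::xhci=debug,warn the xhci module prints debug messages and everything more severe, while the rest of crosvm prints only warn and error messages.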

Boot a Kernel

To run a very basic VM with just a kernel and default devices:

crosvm run "${KERNEL_PATH}"

The compressed kernel image, also known as bzImage, can be found in your kernel build directory; on x86 it is at arch/x86/boot/bzImage.

Rootfs

With a disk image

In most cases, you will want to give the VM a virtual block device to use as a root file system:

crosvm run -b "${ROOT_IMAGE},root,ro" "${KERNEL_PATH}"

The root image must be a path to a disk image formatted in a way that the kernel can read. Typically this is a squashfs image made with mksquashfs or an ext4 image made with mkfs.ext4. By specifying the root flag, the kernel is automatically told to use that image as the root, and therefore it can only be given once. The ro flag also makes the disk image read-only for the guest. More disk images can be given with -b or --block if needed.

To run crosvm with a writable rootfs, just remove the ro flag from the command-line above.

WARNING: Writable disks are at risk of corruption by a malicious or malfunctioning guest OS.

Without the root flag, mounting a disk image as the root filesystem requires passing the corresponding kernel argument manually using the -p option:

crosvm run --block "${ROOT_IMAGE}" -p "root=/dev/vda" bzImage

NOTE: If more disk arguments are added before the desired rootfs image, root=/dev/vda must be adjusted to the appropriate letter.
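With several disks, the letter follows the disk's position. For example, if the rootfs is the third disk argument, the parameter becomes root=/dev/vdc, with a partition number appended for partitioned images (such as vdc5). The sketch below computes the name. It assumes the guest enumerates virtio block devices in command-line order and names them the way the Linux virtio_blk driver does (vda to vdz, then vdaa, vdab, ...). The function names are hypothetical.

```rust
/// Returns the Linux device name for the virtio block device at `index`
/// (0-based): vda, vdb, ..., vdz, vdaa, vdab, ...
/// Uses bijective base-26 letters, like the Linux virtio_blk driver.
/// Assumes disks are enumerated in command-line order.
fn virtio_blk_name(index: usize) -> String {
    let mut index = index;
    let mut letters = Vec::new();
    loop {
        // Emit the least significant "digit" first.
        letters.push(b'a' + (index % 26) as u8);
        if index < 26 {
            break;
        }
        // The -1 makes the numbering bijective: after z comes aa, not ba.
        index = index / 26 - 1;
    }
    letters.reverse();
    format!("vd{}", String::from_utf8(letters).expect("ASCII letters"))
}

/// Builds the root= kernel argument for an unpartitioned rootfs disk at `index`.
fn root_arg(index: usize) -> String {
    format!("root=/dev/{}", virtio_blk_name(index))
}
```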

With virtiofs

Linux kernel 5.4+ is required for using virtiofs. This is convenient for testing. Note that kernels before 5.15 require the file system to be named "mtd*" or "ubi*". See the related discussions and patch for details.

crosvm run --shared-dir "/:mtdfake:type=fs:cache=always" \

-p "rootfstype=virtiofs root=mtdfake" bzImage

Device emulation

Crosvm supports several emulated devices and 15+ types of virtio devices. See "Device" chapter for the details.

Control Socket

If the control socket was enabled with -s, the main process can be controlled while crosvm is running. For example, to tell crosvm to stop and exit:

NOTE: If the socket path given is a directory, a socket name underneath that path will be generated based on crosvm's PID.

crosvm run -s /run/crosvm.sock ${USUAL_CROSVM_ARGS}

<in another shell>

crosvm stop /run/crosvm.sock

WARNING: The guest OS will not be notified or gracefully shut down.

This will cause the original crosvm process to exit in an orderly fashion, allowing it to clean up any OS resources that might have stuck around if crosvm were terminated early.

Multiprocess Mode

By default crosvm runs in multiprocess mode. Each device that supports running inside of a sandbox will run in a jailed child process of crosvm. The sandbox can be disabled for testing with the --disable-sandbox option.

GDB Support

crosvm supports the GDB Remote Serial Protocol, allowing developers to debug the guest kernel via GDB (x86_64 or AArch64 only).

You can enable the feature with the --gdb flag:

# Use uncompressed vmlinux

crosvm run --gdb <port> ${USUAL_CROSVM_ARGS} vmlinux

Then, you can start GDB in another shell.

gdb vmlinux

(gdb) target remote :<port>

(gdb) hbreak start_kernel

(gdb) c

<start booting in the other shell>

For general techniques for debugging the Linux kernel via GDB, see this kernel documentation.

Defaults

The following are crosvm's default arguments and how to override them.

- 256MB of memory (set with -m)

- 1 virtual CPU (set with -c)

- no block devices (set with -b, --block)

- no network device (set with --net)

- only the kernel arguments necessary to run with the supported devices (add more with -p)

- run in multiprocess mode (run in single process mode with --disable-sandbox)

- no control socket (set with -s)

Exit code

Crosvm will exit with a non-zero exit code on failure.

See CommandStatus for meaning of the major exit codes.

Hypervisor

The default hypervisor backend can be overridden using --hypervisor=<backend>.

The available backends are:

- On Linux: "kvm"

- On Windows: "whpx", "haxm", "ghaxm", "gvm"

See the "Hypervisors" chapter for more information.

Custom Kernel / Rootfs

This document explains how to build a custom kernel and use debootstrap to build a rootfs for running crosvm.

For an easier way to get started with prebuilt images, see Example Usage

Build a kernel

The Linux kernel in ChromiumOS comes preconfigured for running in a crosvm guest and is the easiest to build. You can use any mainline kernel, though, as long as it's configured for para-virtualized (virtio) devices.

If you are using the chroot for ChromiumOS development, you already have the kernel source. Otherwise, you can clone it:

git clone --depth 1 -b chromeos-6.6 https://chromium.googlesource.com/chromiumos/third_party/kernel

However you obtain the kernel, the next steps are to configure and build the bzImage:

cd kernel

CHROMEOS_KERNEL_FAMILY=termina ./chromeos/scripts/prepareconfig container-vm-x86_64

make olddefconfig

make -j$(nproc) bzImage

This kernel does not build any modules, nor does it support loading them, so there is no need to worry about an initramfs, although they are supported in crosvm.

Build a rootfs disk

This stage enjoys the most flexibility. There aren't any special requirements for a rootfs in crosvm, but you will at a minimum need an init binary. This could even be /bin/bash if that is enough for your purposes. To get you started, a Debian rootfs can be created with debootstrap.

Make sure to define $CHROOT_PATH.

truncate -s 20G debian.ext4

mkfs.ext4 debian.ext4

mkdir -p "${CHROOT_PATH}"

sudo mount debian.ext4 "${CHROOT_PATH}"

sudo debootstrap stable "${CHROOT_PATH}" http://deb.debian.org/debian/

sudo chroot "${CHROOT_PATH}"

passwd

echo "tmpfs /tmp tmpfs defaults 0 0" >> /etc/fstab

echo "tmpfs /var/log tmpfs defaults 0 0" >> /etc/fstab

echo "tmpfs /root tmpfs defaults 0 0" >> /etc/fstab

echo "sysfs /sys sysfs defaults 0 0" >> /etc/fstab

echo "proc /proc proc defaults 0 0" >> /etc/fstab

exit

sudo umount "${CHROOT_PATH}"

Note: If you run crosvm on a testing device (e.g. Chromebook in Developer mode), another option is to share the host's rootfs with the guest via virtiofs. See the virtiofs usage.

You can simply create a disk image as follows:

fallocate --length 4G disk.img

mkfs.ext4 ./disk.img

Command line options

Command-line options generally take a set of key-value pairs separated by the comma (,) character. The acceptable key-values for each option can be obtained by passing the --help option to a crosvm command:

crosvm run --help

...

-b, --block parameters for setting up a block device.

Valid keys:

path=PATH - Path to the disk image. Can be specified

without the key as the first argument.

ro=BOOL - Whether the block should be read-only.

(default: false)

root=BOOL - Whether the block device should be mounted

as the root filesystem. This will add the required

parameters to the kernel command-line. Can only be

specified once. (default: false)

sparse=BOOL - Indicates whether the disk should support

the discard operation. (default: true)

block-size=BYTES - Set the reported block size of the

disk. (default: 512)

id=STRING - Set the block device identifier to an ASCII

string, up to 20 characters. (default: no ID)

direct=BOOL - Use O_DIRECT mode to bypass page cache.

(default: false)

...

From this help message, we see that the --block or -b option accepts the path, ro, root, sparse, block-size, id, and direct keys. Keys whose default value is mentioned are optional, which means only the path key must always be specified.

One example invocation of the --block option could be:

--block path=/path/to/rootfs.img,root=true,block-size=4096

Keys taking a boolean parameter can be enabled by specifying their name without any value, so the previous option can also be written as:

--block path=/path/to/rootfs.img,root,block-size=4096

Also, the name of the first key can be entirely omitted, which further simplifies our option as:

--block /path/to/rootfs.img,root,block-size=4096
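All three spellings above are equivalent. The following is a minimal sketch of the key-value rules, and it implements only what is described here: comma-separated entries, bare keys treated as true, and an optional key name on the first entry. parse_kv_option is a hypothetical helper, not crosvm's actual argument parser. It does not validate key names or value types.

```rust
use std::collections::HashMap;

/// Parses an option value such as "/path/img,root,block-size=4096" into
/// key/value pairs. Hypothetical sketch of the rules described above:
/// - entries are separated by commas;
/// - the first entry may omit its key, in which case `first_key` is used;
/// - any later entry without '=' is a boolean flag set to "true".
fn parse_kv_option(value: &str, first_key: &str) -> Result<HashMap<String, String>, String> {
    let mut pairs = HashMap::new();
    for (i, entry) in value.split(',').enumerate() {
        let (key, val) = match entry.split_once('=') {
            Some((k, v)) => (k.to_string(), v.to_string()),
            // A leading entry without '=' is the value of the implicit first key.
            None if i == 0 => (first_key.to_string(), entry.to_string()),
            // A later entry without '=' is a boolean key enabled by its name alone.
            None => (entry.to_string(), "true".to_string()),
        };
        if pairs.insert(key.clone(), val).is_some() {
            return Err(format!("duplicate key: {key}"));
        }
    }
    Ok(pairs)
}
```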

System Requirements

Linux

A Linux 4.14 or newer kernel with KVM support (check for /dev/kvm) is required to run crosvm. In order to run certain devices, there are additional system requirements:

- virtio-wayland - A Wayland compositor.

- vsock - Host Linux kernel with vhost-vsock support.

- multiprocess - Host Linux kernel with seccomp-bpf and Linux namespacing support.

- virtio-net - Host Linux kernel with TUN/TAP support (check for /dev/net/tun) and running with CAP_NET_ADMIN privileges.
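To check the KVM requirement from a program instead of by hand, you can try opening /dev/kvm for reading and writing, since that is the access crosvm needs. The following is a minimal sketch. check_rw_access and kvm_status are hypothetical helpers and are not part of crosvm. Attempting the open is a more reliable test than inspecting permission bits, because it also accounts for group membership and ACLs.

```rust
use std::fs::OpenOptions;
use std::io;
use std::path::Path;

/// Checks whether the current user can open `path` for reading and writing.
/// Opening without `truncate` or `create` leaves the file untouched.
fn check_rw_access(path: &Path) -> io::Result<()> {
    OpenOptions::new().read(true).write(true).open(path).map(|_| ())
}

/// Summarizes whether /dev/kvm is usable, with a hint for common failures.
fn kvm_status() -> String {
    match check_rw_access(Path::new("/dev/kvm")) {
        Ok(()) => "/dev/kvm is accessible".to_string(),
        Err(e) if e.kind() == io::ErrorKind::NotFound => {
            "/dev/kvm not found: is KVM enabled in the host kernel?".to_string()
        }
        Err(e) if e.kind() == io::ErrorKind::PermissionDenied => {
            "no read/write access to /dev/kvm: is your user in the kvm group?".to_string()
        }
        Err(e) => format!("cannot open /dev/kvm: {e}"),
    }
}
```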

Features

Feature flags of the crosvm crate control which features are included in the binary. These features can be enabled using Cargo's --features flag. Some features are enabled by default unless the Cargo --no-default-features flag is specified. See the crosvm crate documentation for details.

Programmatic Interaction Using the crosvm_control Library

Usage

crosvm_control provides a programmatic way to interface with crosvm as a substitute for the CLI.

The library itself is written in Rust, but a C/C++ compatible header (crosvm_control.h) is generated during the crosvm build and emitted to the Rust OUT_DIR (see the build.rs script for more information).

The best practice for using crosvm_control from your project is to exclusively use the crosvm_control.h generated by the crosvm build. This ensures that there will never be a runtime version mismatch between your project and crosvm. Additionally, this will allow for build-time checks against the crosvm API.

During your project's build step, when building the crosvm dependency, the emitted crosvm_control.h should be installed to your project's include dir, overwriting the old version if present.

Changes

As crosvm_control is an externally facing interface to crosvm, great care must be taken when updating the API surface. Any breaking change to a crosvm_control entrypoint must be handled the same way as a breaking change to the crosvm CLI.

As a general rule, additive changes (such as adding new fields to the end of a struct, or adding a new API) are fine and should be integrated correctly with downstream projects so long as those projects follow the usage best practices. Changes to the signature of any existing crosvm_control function will cause problems downstream and should be considered breaking changes.
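You can check directly why appending a field counts as an additive change. Under #[repr(C)], which is the layout a C header describes, fields are laid out in declaration order. Appending a field therefore leaves the offsets of the existing fields unchanged. The following is a sketch with hypothetical VmStatsV1/VmStatsV2 types, which are not real crosvm_control types. It uses std::mem::offset_of!, which has been stable since Rust 1.77.

```rust
use std::mem::offset_of;

/// Version 1 of a hypothetical C-compatible struct exposed through a header.
#[repr(C)]
struct VmStatsV1 {
    vcpu_count: u32,
    memory_mb: u64,
}

/// Version 2 appends one field at the end; the V1 fields are untouched.
#[repr(C)]
struct VmStatsV2 {
    vcpu_count: u32,
    memory_mb: u64,
    balloon_mb: u64,
}

/// Returns true if every V1 field sits at the same offset in V2, so code
/// compiled against V1 still reads and writes the right bytes.
fn v1_layout_preserved() -> bool {
    offset_of!(VmStatsV1, vcpu_count) == offset_of!(VmStatsV2, vcpu_count)
        && offset_of!(VmStatsV1, memory_mb) == offset_of!(VmStatsV2, memory_mb)
}
```

The struct's size does grow. A caller that allocates the struct itself from a stale header would therefore pass a buffer that is too small. This is one reason the best practice above is to always build against the freshly generated crosvm_control.h.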

(ChromeOS Developers Only)

For ChromeOS, it is possible to integrate a breaking change from upstream crosvm, but it should be avoided if at all possible. See here for more information.

Testing

Crosvm runs on a variety of platforms with a significant amount of platform-specific code. Testing on all the supported platforms is crucial to keep crosvm healthy.

Types of tests

Unit Tests

Unit tests are your standard Rust tests embedded with the rest of the code in src/ and wrapped in a #[cfg(test)] attribute.

Unit tests cannot make any guarantees on the runtime environment. Avoid doing the following in unit tests:

- Avoid kernel features such as io_uring or userfaultfd, which may not be available on all kernels.

- Avoid functionality that requires privileges (e.g. CAP_NET_ADMIN)

- Avoid spawning threads or processes

- Avoid accessing kernel devices

- Avoid global state in unit tests

This allows us to execute unit tests for any platform using emulators such as qemu-user-static or wine64.
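The following is a minimal sketch of a unit test that follows these rules. It exercises a pure function, so it needs no privileges, devices, threads or global state, and it can run unchanged under an emulator. split_cmdline is a hypothetical helper used only for illustration.

```rust
/// Hypothetical helper under test: splits a kernel command line into
/// whitespace-separated parameters. Pure function, no I/O or global state.
fn split_cmdline(cmdline: &str) -> Vec<&str> {
    cmdline.split_whitespace().collect()
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn splits_on_whitespace() {
        assert_eq!(
            split_cmdline("root=/dev/vda5  console=ttyS0"),
            vec!["root=/dev/vda5", "console=ttyS0"]
        );
    }

    #[test]
    fn empty_cmdline_has_no_params() {
        assert!(split_cmdline("").is_empty());
    }
}
```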

File Access in Unit Tests

Some unit tests may need to access extra data files. The files should be accessed at build time using the include_str!() macro, rather than at run time. The file path is resolved relative to the current source file (similarly to how modules are found). The contents of the file can be used directly in the test, or the test can write this data to a temporary file at runtime. This approach is crucial because certain test environments may require running the test binaries directly without access to the source code. Additionally, it ensures the test binary can be run manually within a debugger like GDB.

These approaches ensure that unit tests are able to find the correct paths in various build and execution environments.

Example:

use std::fs::{self, File};

use tempfile::TempDir;

#[test]
fn test_my_config() {
    let temp_dir = TempDir::new().unwrap();
    let path = temp_dir.path().join("my_config.cfg");
    // Embedded at build time; the path is relative to this source file.
    let test_config = include_str!("../../../data/config/my_config.cfg");
    fs::write(&path, test_config).expect("Unable to write test file");
    let config_file = File::open(path).expect("Failed to open config file");
    // ... rest of your test ...
}

Documentation tests

Rust's documentation tests can be used to provide examples as part of the documentation that is verified by CI.

Documentation tests are slow and not run as part of the usual workflows, but can be run locally with:

./tools/presubmit doc_tests
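A documentation test is an example written inside a /// doc comment. Rustdoc extracts the example, compiles it against the crate, and runs it, for example via cargo test --doc. The following is a minimal sketch in which my_crate and align_up are placeholders. The example uses a ~~~ fence, which rustdoc treats the same as a backtick fence.

```rust
/// Rounds `value` up to the next multiple of `align`, which must be non-zero.
///
/// The example below is a doctest: rustdoc compiles and runs it.
/// `my_crate` is a placeholder crate name.
///
/// ~~~
/// use my_crate::align_up;
///
/// assert_eq!(align_up(5000, 4096), 8192);
/// assert_eq!(align_up(4096, 4096), 4096);
/// ~~~
pub fn align_up(value: u64, align: u64) -> u64 {
    (value + align - 1) / align * align
}
```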

Integration tests

Cargo has native support for integration testing.

Integration tests are written just like unit tests, but live in a separate directory at tests/.

Integration tests guarantee that the test has privileged access to the test environment. They are only executed when a device-under-test (DUT) is specified when running tests:

./tools/run_tests --dut=vm|host

End To End (E2E) tests

End to end tests live in the e2e_tests crate. The crate provides a framework to boot a guest with crosvm and execute commands in the guest to validate functionality at a high level.

E2E tests are executed just like integration tests. By passing nextest filter expressions, you can run a subset of the tests.

# Run all e2e tests

./tools/run_tests --dut=vm --filter-expr 'package(e2e_tests)'

# Run e2e tests whose name contains the string 'boot'.

./tools/run_tests --dut=vm --filter-expr 'package(e2e_tests) and test(boot)'

Downstream Product tests

Each downstream product that uses crosvm performs its own testing, e.g. ChromeOS runs high-level testing of its VM features on ChromeOS hardware, while AOSP runs testing of its VM features on AOSP hardware.

Upstream crosvm is not involved in these tests and they are not executed in crosvm CI.

Parallel test execution

Crosvm tests are executed in parallel, each test case in its own process via cargo nextest.

This requires tests to be cautious about global state, especially integration tests which interact with system devices.

If you require exclusive access to a device or file, you have to use file-based locking to prevent access by other test processes.
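One portable way to take such a lock is to create a lock file atomically with create_new, which maps to O_CREAT | O_EXCL on Unix. Because the creation is atomic, only one process can hold the lock at a time. The following sketch illustrates that technique and is not crosvm's own test utility code. LockFileGuard is a hypothetical name, and the caller chooses the lock path.

```rust
use std::fs::{self, OpenOptions};
use std::io::{self, ErrorKind};
use std::path::{Path, PathBuf};
use std::thread;
use std::time::{Duration, Instant};

/// Holds an exclusive lock represented by a lock file; dropping the guard
/// removes the file and releases the lock.
struct LockFileGuard {
    path: PathBuf,
}

impl LockFileGuard {
    /// Retries until the lock file can be created or `timeout` elapses.
    fn acquire(path: &Path, timeout: Duration) -> io::Result<LockFileGuard> {
        let start = Instant::now();
        loop {
            // create_new fails with AlreadyExists if another process holds the lock.
            match OpenOptions::new().write(true).create_new(true).open(path) {
                Ok(_) => return Ok(LockFileGuard { path: path.to_path_buf() }),
                Err(e) if e.kind() == ErrorKind::AlreadyExists => {
                    if start.elapsed() >= timeout {
                        return Err(io::Error::new(
                            ErrorKind::TimedOut,
                            "lock is held by another process",
                        ));
                    }
                    thread::sleep(Duration::from_millis(50));
                }
                Err(e) => return Err(e),
            }
        }
    }
}

impl Drop for LockFileGuard {
    fn drop(&mut self) {
        // Best effort: ignore errors so that Drop never panics.
        let _ = fs::remove_file(&self.path);
    }
}
```

A lock file left behind by a killed test process has to be removed manually. Advisory locks such as flock(2) avoid this problem, because the kernel releases them when the process exits.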

Platforms tested

The platforms below can all be tested using tools/run_tests -p $platform. The table indicates how these tests are executed:

| Platform | Build | Unit Tests | Integration Tests | E2E Tests |
|---|---|---|---|---|
| x86_64 (linux) | ✅ | ✅ | ✅ | ✅ |
| aarch64 (linux) | ✅ | ✅ (qemu-user [1]) | ✅ (qemu [2]) | ❌ |
| mingw64 [3] (linux) | 🚧 | 🚧 (wine64) | ❌ | ❌ |
| mingw64 [3] (windows) | 🚧 | 🚧 | 🚧 | ❌ |

Crosvm CI will use the same configuration as tools/run_tests.

Debugging Tips

Here are some tips for developing and debugging crosvm tests.

Enter a test VM to see logs

When you run a test on a VM with ./tools/run_tests --dut=vm, if the test fails, you'll see extracted log messages. To see the full messages or monitor the test process at runtime, you may want to enter the test VM.

First, enter the VM's shell and start printing the syslog:

$ ./tools/dev_container # Enter the dev_container

$ ./tools/x86vm shell # Enter the test VM

crosvm@testvm-x8664:~$ journalctl -f

# syslog messages will be printed...

Then, open another terminal and run a test:

$ ./tools/run_tests --dut=vm --filter-expr 'package(e2e_tests) and test(boot)'

The crosvm log will then appear in the first terminal.

[1] qemu-aarch64-static or qemu-arm-static translates instructions into x86 and executes them on the host kernel. This works well for unit tests, but fails when interacting with platform-specific kernel features.

[2] run_tests will launch a VM for testing in the background. This VM uses full system emulation, which makes tests slow. Also, not all aarch64 features are properly emulated, which prevents us from running e2e tests.

[3] Windows builds of crosvm are a work in progress. Some tests are executed via wine64 on Linux.

Fuzzing

Crosvm contains several fuzz testing programs that are intended to exercise specific subsets of the code with automatically generated inputs to help uncover bugs that were not found by human-written unit tests.

The source code for the fuzzer target programs can be found in fuzz/fuzz_targets in the crosvm source tree.

OSS-Fuzz

Crosvm makes use of the OSS-Fuzz service, which automatically builds and runs fuzzers for many open source projects. Once a crosvm change is committed and pushed to the main branch, it will be tested automatically by ClusterFuzz, and if new issues are found, a bug will be filed.

Running fuzzers locally

It can be useful to run a fuzzer in order to test new changes locally or to reproduce a bug filed by ClusterFuzz.

To build and run a specific fuzz target, install cargo fuzz, then run it in the crosvm source tree, specifying the desired fuzz target. If a bug report from the automated fuzzing infrastructure includes a testcase, you can add that file to the fuzzer command line to reproduce the same fuzzer execution instead of using randomly generated inputs.

# Run virtqueue_fuzzer with randomly-generated input.

# This will run indefinitely; it can be stopped with Ctrl+C.

cargo +nightly fuzz run virtqueue_fuzzer

# Run virtqueue_fuzzer with a specific input file from ClusterFuzz.

cargo +nightly fuzz run virtqueue_fuzzer clusterfuzz-testcase-minimized-...

Devices

This chapter describes emulated devices in crosvm. These devices work like hardware for the guest.

List of devices

Here is a (non-comprehensive) list of emulated devices provided by crosvm.

Emulated Devices

- CMOS/RTC - Used to get the current calendar time.
- i8042 - Used by the guest kernel to exit crosvm.
- usb - xHCI emulation to provide USB device passthrough.
- serial - x86 I/O port driven serial devices that print to stdout and take input from stdin.

VirtIO Devices

- balloon - Allows the host to reclaim the guest's memory.
- block - Basic read/write block device.
- console - Console input and output.
- fs - Shares file systems over the FUSE protocol.
- gpu - Graphics adapter.
- input - Creates virtual human interface devices such as keyboards.
- iommu - Emulates an IOMMU device to manage DMA from endpoints in the guest.
- net - Device to interface the host and guest networks.
- p9 - Shares file systems over the 9P protocol.
- pmem - Persistent memory.
- rng - Entropy source used to seed the guest OS's entropy pool.
- scsi - SCSI device.
- snd - Encodes and decodes audio streams.
- tpm - Creates a TPM (Trusted Platform Module) device backed by the vTPM daemon.
- video - Allows the guest to leverage the host's video capabilities.
- wayland - Allows the guest to use the host's Wayland socket.
- vsock - Enables use of virtual sockets for the guest.
- vhost-user - VirtIO devices that offload the device implementation to another process through the vhost-user protocol:
  - frontend side: Shares its virtqueues.
  - backend side: Consumes virtqueues.

Device hotplug (experimental)

A hotplug-capable device can be added as a PCI device to the guest. To enable hotplug, compile crosvm with the feature flag pci-hotplug:

cargo build --features=pci-hotplug # additional parameters

When starting the VM, specify the number of slots with the --pci-hotplug-slots option. Additionally, specify a control socket with the -s option for sending hotplug commands.

For example, to run a VM with 3 PCI hotplug slots and control socket:

VM_SOCKET=/run/crosvm.socket
crosvm run \
  -s ${VM_SOCKET} \
  --pci-hotplug-slots 3 \
  ... # usual crosvm args

Currently, only network devices are supported.

Block

crosvm supports a virtio-block device that works as a disk for the guest.

First, create an ext4 (or whatever file system you want) disk file.

fallocate -l 1G disk.img

mkfs.ext4 disk.img

Then, pass it with the --block flag so the disk will be exposed as /dev/vda, /dev/vdb, etc. The device can be mounted with the mount command.

crosvm run \
  --block disk.img \
  ... # usual crosvm args

To expose the block device as a read-only disk, you can add the ro flag after the disk image path:

crosvm run \
  --block disk.img,ro \
  ... # usual crosvm args

Rootfs

If you use a block device as the guest's rootfs, you can add the root flag to the --block parameter:

crosvm run \
  --block disk.img,root \
  ... # usual crosvm args

This flag automatically adds a root=/dev/vdX kernel parameter with the corresponding virtio-block device name and read-only (ro) or read-write (rw) option, depending on whether the ro flag has also been specified.
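The following Python sketch illustrates the parameter described above. It is not crosvm's implementation. It assumes disks are named in the order of the --block flags starting at /dev/vda, and that naming continues past vdz with vdaa as in Linux. The helper names are hypothetical. With the prefix "sd", the same helper yields the /dev/sdX names used for virtio-scsi disks.

```python
def disk_name(index, prefix="vd"):
    """Linux-style disk name for a 0-based index: 0 -> vda, 25 -> vdz, 26 -> vdaa."""
    letters = ""
    while True:
        letters = chr(ord("a") + index % 26) + letters
        index = index // 26 - 1
        if index < 0:
            return prefix + letters


def root_kernel_param(disk_index, read_only, prefix="vd"):
    """Kernel parameters equivalent to what the root flag is described as adding."""
    mode = "ro" if read_only else "rw"
    return f"root=/dev/{disk_name(disk_index, prefix)} {mode}"


# The first --block disk marked root,ro would correspond to "root=/dev/vda ro".
print(root_kernel_param(0, read_only=True))
```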

Options

The --block parameter supports additional options to enable features and control disk parameters. These may be specified as extra comma-separated key=value options appended to the required filename option. For example:

crosvm run \
  --block disk.img,ro,sparse=false,o_direct=true,block_size=4096,id=MYSERIALNO \
  ... # usual crosvm args

The available options are documented in the following sections.

Sparse

- Syntax: sparse=(true|false)
- Default: sparse=true

The sparse option controls whether the disk exposes the thin provisioning discard command. If sparse is set to true, the VIRTIO_BLK_T_DISCARD request will be available, and it will be translated to the appropriate system call on the host disk image file (for example, fallocate(FALLOC_FL_PUNCH_HOLE) for raw disk images on Linux). If sparse is set to false, the disk will be fully allocated at startup (using fallocate() or equivalent on other platforms), and the VIRTIO_BLK_T_DISCARD request will not be supported for this device.

O_DIRECT

- Syntax: o_direct=(true|false)
- Default: o_direct=false

The o_direct option enables the Linux O_DIRECT flag on the underlying disk image, indicating that I/O should be sent directly to the backing storage device rather than using the host page cache. This should only be used with raw disk images, not qcow2 or other formats. The block_size option may need to be adjusted to ensure that I/O is sufficiently aligned for the host block device and filesystem requirements.

Block size

- Syntax: block_size=BYTES
- Default: block_size=512

The block_size option overrides the reported block size (also known as sector size) of the virtio-block device. This should be a power of two larger than or equal to 512.
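The constraint above can be checked before launching crosvm. This is a small Python sketch. The `block_arg` helper is hypothetical and only formats the option string used in the example above.

```python
def is_valid_block_size(block_size):
    """True if block_size is a power of two and at least 512 bytes."""
    # A power of two has exactly one bit set, so n & (n - 1) clears it to zero.
    return block_size >= 512 and block_size & (block_size - 1) == 0


def block_arg(path, block_size):
    """Format a --block value with an explicit block_size, rejecting invalid sizes."""
    if not is_valid_block_size(block_size):
        raise ValueError(f"block_size must be a power of two >= 512, got {block_size}")
    return f"{path},block_size={block_size}"
```

For example, `block_arg("disk.img", 4096)` returns `disk.img,block_size=4096`, while 1000 or 256 raise an error.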

ID

- Syntax: id=DISK_ID
- Default: No identifier

The id option provides the virtio-block device with a unique identifier. The DISK_ID string must be 20 or fewer ASCII printable characters. The id may be used by the guest environment to uniquely identify a specific block device rather than making assumptions about block device names.

The Linux virtio-block driver exposes the disk identifier in a sysfs file named serial; an example path looks like /sys/devices/pci0000:00/0000:00:02.0/virtio1/block/vda/serial (the PCI address may differ depending on which other devices are enabled).
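The following is a Python sketch of both sides of the id option. `is_valid_disk_id` checks the documented limit on the host, treating "ASCII printable" as the range 0x20 to 0x7E. `read_disk_serial` reads the identifier in the guest. It assumes /sys/block/<name> links to the device directory that holds the serial file shown above. Both helper names are hypothetical.

```python
def is_valid_disk_id(disk_id):
    """At most 20 characters, all printable ASCII (space through '~')."""
    return len(disk_id) <= 20 and all(0x20 <= ord(c) <= 0x7E for c in disk_id)


def read_disk_serial(dev_name):
    """Guest side: return the id exposed by the virtio-block driver, e.g. for "vda"."""
    with open(f"/sys/block/{dev_name}/serial") as f:
        return f.read().strip()
```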

Resizing

The crosvm block device supports run-time resizing. This can be accomplished by starting crosvm with the -s control socket, then using the crosvm disk command to send a resize request:

crosvm disk resize DISK_INDEX NEW_SIZE VM_SOCKET

- DISK_INDEX: 0-based index of the block device (counting all --block flags in order).
- NEW_SIZE: desired size of the disk image in bytes.
- VM_SOCKET: path to the VM control socket specified when running crosvm (-s/--socket option).

For example:

# Create a 1 GiB disk image
truncate -s 1G disk.img

# Run crosvm with a control socket
crosvm run \
  --block disk.img,sparse=false \
  -s /tmp/crosvm.sock \
  ... # other crosvm args

# In another shell, extend the disk image to 2 GiB.
crosvm disk resize \
  0 \
  $((2 * 1024 * 1024 * 1024)) \
  /tmp/crosvm.sock

# The guest OS should recognize the updated size and log a message:
# virtio_blk virtio1: [vda] new size: 4194304 512-byte logical blocks (2.15 GB/2.00 GiB)

The crosvm disk resize command only resizes the block device and its backing disk image. It is the responsibility of the VM socket user to perform any partition table or filesystem resize operations, if required.
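NEW_SIZE is given in bytes, so it can help to compute it from a friendlier unit. The Python sketch below builds the same crosvm disk resize argument list as the shell example above. It also computes how many 512-byte logical blocks the guest should report, which matches the 4194304 in the sample log line. The helper names are hypothetical. The list could be passed to subprocess.run(cmd, check=True) on the host.

```python
GIB = 1024 ** 3


def resize_command(disk_index, new_size_bytes, vm_socket):
    """Argument list for `crosvm disk resize DISK_INDEX NEW_SIZE VM_SOCKET`."""
    return ["crosvm", "disk", "resize", str(disk_index), str(new_size_bytes), vm_socket]


def logical_blocks(size_bytes, block_size=512):
    """Number of logical blocks the guest sees for a disk of size_bytes."""
    return size_bytes // block_size


new_size = 2 * GIB  # same value as $((2 * 1024 * 1024 * 1024))
cmd = resize_command(0, new_size, "/tmp/crosvm.sock")
```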

Input

crosvm supports virtio-input devices that provide human input devices like multi-touch devices, trackpads, keyboards, and mice.

Events may be sent to the input device via a socket carrying virtio_input_event structures. On Unix-like platforms, this socket must be a UNIX domain socket in stream mode (AF_UNIX/AF_LOCAL, SOCK_STREAM). Typically this will be created by a separate program that listens and accepts a connection on this socket and sends the desired events.
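Below is a minimal Python sketch of such a separate program. It assumes the virtio_input_event layout from the virtio specification: little-endian 16-bit type, 16-bit code, and 32-bit value. It also uses the Linux input event codes EV_SYN, SYN_REPORT, EV_KEY and KEY_A. The program listens on the socket path, waits for crosvm to connect, and types the letter "a". Because crosvm connects to the listening program, start serve_keyboard() before launching crosvm with --input keyboard[path=/tmp/keyboard-socket]. The function names are hypothetical.

```python
import os
import socket
import struct

# struct virtio_input_event { le16 type; le16 code; le32 value; }
EVENT_FORMAT = "<HHi"  # signed value so relative axes can carry negative deltas

# Constants from Linux's input-event-codes.h.
EV_SYN, SYN_REPORT = 0x00, 0
EV_KEY, KEY_A = 0x01, 30


def pack_event(ev_type, code, value):
    """Encode one virtio_input_event (8 bytes)."""
    return struct.pack(EVENT_FORMAT, ev_type, code, value)


def key_tap(key_code):
    """Press then release a key, each followed by a SYN_REPORT."""
    return b"".join([
        pack_event(EV_KEY, key_code, 1),
        pack_event(EV_SYN, SYN_REPORT, 0),
        pack_event(EV_KEY, key_code, 0),
        pack_event(EV_SYN, SYN_REPORT, 0),
    ])


def serve_keyboard(path="/tmp/keyboard-socket"):
    """Accept crosvm's connection on `path` and send one key tap."""
    if os.path.exists(path):
        os.unlink(path)  # remove a stale socket from a previous run
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as server:
        server.bind(path)
        server.listen(1)
        conn, _ = server.accept()
        with conn:
            conn.sendall(key_tap(KEY_A))
```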

On Linux, it is also possible to grab an evdev device and forward its events to the guest.

The general syntax of the input option is as follows:

--input DEVICE-TYPE[KEY=VALUE,KEY=VALUE,...]

For example, to create a 1920x1080 multi-touch device reading data from /tmp/multi-touch-socket:

crosvm run \
  ... \
  --input multi-touch[path=/tmp/multi-touch-socket,width=1920,height=1080] \
  ...

The available device types and their specific options are listed below.

Input device types

Evdev

Linux only.

Passes an event device node into the VM. The device will be grabbed (unusable from the host) and made available to the guest with the same configuration it shows on the host.

Options:

- path (required): path to the evdev device, e.g. /dev/input/event0

Example:

crosvm run \
  --input evdev[path=/dev/input/event0] \
  ...

Keyboard

Add a keyboard virtio-input device.

Options:

- path (required): path to the event source socket

Example:

crosvm run \
  --input keyboard[path=/tmp/keyboard-socket] \
  ...

Mouse

Add a mouse virtio-input device.

Options:

- path (required): path to the event source socket

Example:

crosvm run \
  --input mouse[path=/tmp/mouse-socket] \
  ...

Multi-Touch

Add a multi-touch touchscreen virtio-input device.

Options:

- path (required): path to the event source socket
- width (optional): width of the touchscreen in pixels (default: 1280)
- height (optional): height of the touchscreen in pixels (default: 1024)
- name (optional): device name string

If width and height are not specified, the first multi-touch input device is sized to match the GPU display size, if specified.

Example:

crosvm run \
  ... \
  --input multi-touch[path=/tmp/multi-touch-socket,width=1920,height=1080,name=mytouch2] \
  ...

Rotary

Add a rotating side button/wheel virtio-input device.

Options:

- path (required): path to the event source socket

Example:

crosvm run \
  --input rotary[path=/tmp/rotary-socket] \
  ...

Single-Touch

Add a single-touch touchscreen virtio-input device.

Options:

- path (required): path to the event source socket
- width (optional): width of the touchscreen in pixels (default: 1280)
- height (optional): height of the touchscreen in pixels (default: 1024)
- name (optional): device name string

If width and height are not specified, the first single-touch input device is sized to match the GPU display size, if specified.

Example:

crosvm run \
  ... \
  --input single-touch[path=/tmp/single-touch-socket,width=1920,height=1080,name=mytouch1] \
  ...

Switches

Add a switches virtio-input device. Switches are often used for accessibility, such as with the Android Switch Access feature.

Options:

- path (required): path to the event source socket

Example:

crosvm run \
  --input switches[path=/tmp/switches-socket] \
  ...

Trackpad

Add a trackpad virtio-input device.

Options:

- path (required): path to the event source socket
- width (optional): width of the trackpad in pixels (default: 1280)
- height (optional): height of the trackpad in pixels (default: 1024)
- name (optional): device name string

Example:

crosvm run \
  ... \
  --input trackpad[path=/tmp/trackpad-socket,width=1920,height=1080,name=mytouch1] \
  ...

Custom

Add a custom virtio-input device.

- path (required): path to the event source socket
- config-path (required): path to the file configuring the device

crosvm run \
  --input custom[path=/tmp/keyboard-socket,config-path=/tmp/custom-keyboard-config.json] \
  ...

The config-path option requires a JSON-formatted configuration file. "events" configures the supported events, "name" defines the customized device name, "serial_name" defines the customized serial name, "properties" defines the device properties, and "axis_info" defines the axis information.

An example configuration file covering all property types can be found in /devices/tests/data/input/example_custom_multitouchscreen_config.json. It configures a device equivalent to the default multitouch screen device (crosvm run --input=multi-touch,...). Another example can be found in /devices/tests/data/input/example_custom_keyboard_config.json, which configures a device equivalent to the default keyboard (crosvm run --input=keyboard,...).

Here is a portion of the example config file:

{
  "name": "Crosvm Virtio Multitouch Touchscreen 0",
  "serial_name": "virtio-touchscreen-0",
  "properties": {"INPUT_PROP_DIRECT": 1},
  "events": [
    {
      "event_type": "EV_KEY",
      "event_type_code": 1,
      "supported_events": {
        "BTN_TOUCH": 330
      }
    }, {
      ...
    }
  ],
  "axis_info": [
    {
      "axis": "ABS_MT_SLOT",
      "axis_code": 47,
      "min": 0,
      "max": 10,
      "fuzz": 0,
      "flat": 0
    }, {
      ...
    }
  ]
}

Network

Host TAP configuration

The most convenient way to provide a network device to a guest is to set up a persistent TAP interface on the host. This section explains how to do this for basic IPv4 connectivity.

sudo ip tuntap add mode tap user $USER vnet_hdr crosvm_tap

sudo ip addr add 192.168.10.1/24 dev crosvm_tap

sudo ip link set crosvm_tap up

These commands create a TAP interface named crosvm_tap that is accessible to the current user, configure the host to use the IP address 192.168.10.1, and bring the interface up.

The next step is to make sure that traffic from/to this interface is properly routed:

sudo sysctl net.ipv4.ip_forward=1

# Network interface used to connect to the internet.

HOST_DEV=$(ip route get 8.8.8.8 | awk -- '{printf $5}')

sudo iptables -t nat -A POSTROUTING -o "${HOST_DEV}" -j MASQUERADE

sudo iptables -A FORWARD -i "${HOST_DEV}" -o crosvm_tap -m state --state RELATED,ESTABLISHED -j ACCEPT

sudo iptables -A FORWARD -i crosvm_tap -o "${HOST_DEV}" -j ACCEPT

Start crosvm with network

The interface is now configured and can be used by crosvm:

crosvm run \
  ... \
  --net tap-name=crosvm_tap \
  ...

Configure network in guest

Provided the guest kernel has support for VIRTIO_NET, the network device should be visible and configurable from the guest.

# Replace with the actual network interface name of the guest

# (use "ip addr" to list the interfaces)

GUEST_DEV=enp0s5

sudo ip addr add 192.168.10.2/24 dev "${GUEST_DEV}"

sudo ip link set "${GUEST_DEV}" up

sudo ip route add default via 192.168.10.1

# "8.8.8.8" is chosen arbitrarily as a default, please replace with your local (or preferred global)

# DNS provider, which should be visible in `/etc/resolv.conf` on the host.

echo "nameserver 8.8.8.8" | sudo tee /etc/resolv.conf

These commands assign IP address 192.168.10.2 to the guest, activate the interface, and route all network traffic to the host. The last line also ensures DNS will work.
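The guest's default route works because 192.168.10.1 lies on the same /24 subnet as the guest address. If you choose different addresses, you can confirm the gateway is still directly reachable with Python's standard ipaddress module. This is a sketch, not part of crosvm, and the helper name is hypothetical.

```python
import ipaddress

# Addresses used in the commands above.
HOST_TAP = ipaddress.ip_interface("192.168.10.1/24")  # crosvm_tap on the host
GUEST_IF = ipaddress.ip_interface("192.168.10.2/24")  # ${GUEST_DEV} in the guest


def can_use_as_gateway(guest_if, gateway_if):
    """True if the gateway address is on the guest's subnet and is not the guest itself."""
    return gateway_if.ip in guest_if.network and gateway_if.ip != guest_if.ip
```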

Please refer to your distribution's documentation for instructions on how to make these settings persistent for the host and guest if desired.

Device hotplug (experimental)

On a hotplug-enabled VM, a TAP device can be hotplugged using the virtio-net command:

crosvm virtio-net add crosvm_tap ${VM_SOCKET}

Upon success, crosvm virtio-net will report the PCI bus number the device is plugged into:

[[time redacted] INFO crosvm] Tap device crosvm_tap plugged to PCI bus 3

The hotplugged device can then be configured inside the guest OS similarly to a statically configured device. (Replace ${GUEST_DEV} with the hotplugged device, e.g.: enp3s0.)

Due to sandboxing, crosvm does not have CAP_NET_ADMIN even if crosvm is started using sudo. Therefore, hotplug only accepts a persistent TAP device owned by the user running crosvm, unless sandboxing is disabled.

The device can be removed from the guest using the PCI bus number:

crosvm virtio-net remove 3 ${VM_SOCKET}

Balloon

crosvm supports virtio-balloon for managing guest memory.

How to control the balloon size

When running a VM, specify the control socket path with the -s option (for example, CROSVM_SOCKET=/run/crosvm.sock).

crosvm run \
  -s ${CROSVM_SOCKET} \
  <usual crosvm args> \
  /path/to/bzImage

Then, open another terminal and specify the balloon size in bytes with the crosvm balloon command.

crosvm balloon 4096 ${CROSVM_SOCKET}

Note: The balloon size is managed in 4096-byte units. The specified value will be rounded down to a multiple of 4096 bytes.
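To see what the rounding rule above means for a given request, here is a minimal Python sketch. The helper name is hypothetical.

```python
BALLOON_UNIT = 4096  # bytes; the balloon size is managed in these units


def effective_balloon_size(requested_bytes):
    """Round a requested balloon size down to a multiple of BALLOON_UNIT."""
    return requested_bytes - requested_bytes % BALLOON_UNIT
```

For example, requesting 10000 bytes results in an 8192-byte balloon, and any request below 4096 rounds down to 0.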

You can confirm the balloon size with crosvm balloon_stats command.

crosvm balloon_stats ${CROSVM_SOCKET}

SCSI (experimental)

crosvm supports virtio-scsi devices that work as block devices for the guest.

The steps for setting up a block device are similar to those for the virtio-blk device. After setting up the block device, pass it with the --scsi-block flag so the disk will be exposed as /dev/sda, /dev/sdb, etc. The device can be mounted with the mount command.

crosvm run \
  --scsi-block disk.img \
  ... # usual crosvm args

Flags & Options

The --scsi-block parameter supports additional options and flags to enable features and control disk parameters.

Read-only

To expose the SCSI device as a read-only disk, you can add the ro flag after the disk image path:

crosvm run \
  --scsi-block disk.img,ro \
  ... # usual crosvm args

Rootfs

If you use a SCSI device as the guest's rootfs, you can add the root flag to the --scsi-block parameter:

crosvm run \
  --scsi-block disk.img,root \
  ... # usual crosvm args

This flag automatically adds a root=/dev/sdX kernel parameter with the corresponding virtio-scsi device name and read-only (ro) or read-write (rw) option, depending on whether the ro flag has also been specified.

Block size

- Syntax: block_size=BYTES
- Default: block_size=512

The block_size option overrides the reported block size (also known as sector size) of the virtio-scsi device. This should be a power of two larger than or equal to 512.

Fs

Crosvm supports virtio-fs, a shared file system that lets virtual machines access a directory tree on the host. This section explains how to create a shared directory. You can also find a runnable sample in tools/examples/example_fs.

Creating a Shared Directory on the Host Machine

To create a shared directory, run the following commands in the host machine:

mkdir host_shared_dir

HOST_SHARED_DIR=$(pwd)/host_shared_dir

crosvm run \
  --shared-dir "$HOST_SHARED_DIR:my_shared_tag:type=fs" \
  ... # usual crosvm args

In the --shared-dir argument:

- The first field is the directory to be shared ($HOST_SHARED_DIR in this example).
- The second field is the tag that the VM will use to identify the device (my_shared_tag in this example).
- The remaining fields are key-value pairs configuring the shared directory.

To see available options, run crosvm run --help.

Mount the Shared Directory in the Guest OS

Next, switch to the guest OS and run the following commands to set up the shared directory:

sudo su

mkdir /tmp/guest_shared_dir

mount -t virtiofs my_shared_tag /tmp/guest_shared_dir

You can now add files to the shared directory. Any files you put in the guest_shared_dir will appear in the host_shared_dir on the host machine, and vice versa.

Running VirtioFS as root filesystem

It is also possible to boot crosvm directly from a virtio-fs directory, as long as the directory structure matches that of a valid rootfs. The outcome is similar to running a chroot but inside a VM.

Running VMs with virtio-fs as root filesystem may not be ideal as performance will not be as good as running a root disk with virtio-block, but it can be useful to run tests and debug while sharing files between host and guest.

You can refer to the advanced usage page for the instructions on how to run virtio-fs as rootfs.

Vsock device

crosvm supports a virtio-vsock device for communication between the host and a guest VM.

Assign a context ID (CID) to a guest VM by passing it with the --vsock flag.

GUEST_CID=3

crosvm run \
  --vsock "${GUEST_CID}" \
  <usual crosvm arguments> \
  /path/to/bzImage

Then, the guest and the host can communicate with each other via vsock. The host always has context ID 2.

crosvm assumes that the host has a vsock device at /dev/vhost-vsock. If you want to use a device at a different path or one given as an fd, you can use the --vhost-vsock-device or --vhost-vsock-fd flag, respectively.

Example usage

This example assumes ncat is installed. If you are using a VM image created with virt-builder, it needs to come pre-installed with ncat. This can be achieved by running the following command:

# Build a simple ubuntu image and create a user with no password.
virt-builder ubuntu-20.04 \
  --run-command "useradd -m -g sudo -p '' $USER ; chage -d 0 $USER" \
  -o ./rootfs \
  --install ncat

At host shell:

PORT=11111

# Listen at host

ncat -l --vsock ${PORT}

At guest shell:

HOST_CID=2

PORT=11111

# Make a connection to the host

ncat --vsock ${HOST_CID} ${PORT}

If a vsock device is configured properly in the guest VM, a connection between the host and the guest can be established and packets can be sent from both sides. In the above example, text typed into the shell on one side should appear in the shell on the other side once the connection is established.
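The same exchange can also be done programmatically. This is a minimal Python sketch. It assumes Linux on both sides and Python 3.7 or later, which exposes socket.AF_VSOCK. Unlike the interactive ncat session, it echoes a single message back. Run host_listen() on the host first, then guest_connect() in the guest. Both function names are hypothetical.

```python
import socket

HOST_CID = 2   # the host's context ID, as stated above
PORT = 11111   # same arbitrary port as the ncat example


def host_listen(port=PORT):
    """Host side: accept one vsock connection and echo back what it receives."""
    with socket.socket(socket.AF_VSOCK, socket.SOCK_STREAM) as server:
        server.bind((socket.VMADDR_CID_ANY, port))
        server.listen(1)
        conn, (peer_cid, _peer_port) = server.accept()
        with conn:
            data = conn.recv(4096)
            conn.sendall(data)
            return peer_cid, data  # peer_cid should equal the guest's --vsock CID


def guest_connect(message=b"hello from the guest\n", port=PORT):
    """Guest side: connect to the host, send a message, and return the echo."""
    with socket.socket(socket.AF_VSOCK, socket.SOCK_STREAM) as sock:
        sock.connect((HOST_CID, port))
        sock.sendall(message)
        return sock.recv(4096)
```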

Pmem

This section contains the following sub pages:

- VirtIO Pmem describes the basic usage of the virtio-pmem device to provide a disk device to the guest.
- Sharing host directory via virtio-pmem describes crosvm's virtual ext2 feature on virtio-pmem, which allows sharing a host directory with the guest as read-only.

VirtIO Pmem

crosvm supports virtio-pmem to provide a virtual device emulating a byte-addressable persistent memory device. The disk image is provided to the guest using a memory-mapped view of the image file, and this mapping can be directly mapped into the guest's address space if the guest operating system and filesystem support DAX.

Pmem devices may be added to crosvm using the --pmem flag, specifying the filename of the backing image as the parameter. By default, the pmem device will be writable; add ro=true to create a read-only pmem device instead.

crosvm run \
  --pmem disk.img \
  ... # usual crosvm args

The Linux virtio-pmem driver can be enabled with the CONFIG_VIRTIO_PMEM option. It will expose pmem devices as /dev/pmem0, /dev/pmem1, etc., which may be mounted like any other block device.

A pmem device may also be used as the root filesystem by adding root=true to the --pmem flag:

crosvm run \
  --pmem rootfs.img,root=true,ro=true \
  ... # usual crosvm args

The advantage of pmem over a regular block device is the potential for less cache duplication; since the guest can directly map pages of the pmem device, it does not need to perform an extra copy into the guest page cache. This can result in lower memory overhead versus virtio-block (when not using O_DIRECT).

The file backing a persistent memory device is mapped directly into the guest's address space, which means that only the raw disk image format is supported; disk images in qcow2 or other formats may not be used as a pmem device. See the block device section for an alternative that supports more file formats.

Sharing host directory with virtio-pmem

crosvm has an experimental feature to share a host directory with the guest as read-only via virtio-pmem device.

How it works

When this feature is enabled, crosvm creates a virtual ext2 filesystem in memory. This filesystem contains the contents of the specified host directory. When creating the file system, crosvm mmaps each file instead of copying its data. As a result, the actual file data is read from disk only when it's accessed by the guest.

Usage

To share a host directory with the guest, you'll need to start crosvm with the device enabled, and mount the device in the guest.

Host

Use the --pmem-ext2 flag to enable the device.

$ mkdir host_shared_dir

$ HOST_SHARED_DIR=$(pwd)/host_shared_dir

$ echo "Hello!" > $HOST_SHARED_DIR/test.txt

$ crosvm run \
  --pmem-ext2 "$HOST_SHARED_DIR" \
  ... # usual crosvm args

You can check a full list of parameters for --pmem-ext2 with crosvm run --help.

Guest

Then, you can mount the ext2 file system from the guest. With -o dax, we can avoid duplicated page caches between the guest and the host.

$ mkdir /tmp/shared

$ mount -t ext2 -o dax /dev/pmem0 /tmp/shared

$ ls /tmp/shared

lost+found test.txt

$ cat /tmp/shared/test.txt

Hello!

Comparison with other methods

Since access to files provided by this device is through pmem, it is done as a host OS page fault. This can reduce the number of context switches to the host userspace compared to virtio-blk or virtio-fs.

This feature is similar to the VVFAT (Virtual FAT filesystem) device in QEMU, but our pmem-ext2 uses the ext2 filesystem and supports read-only access only.

USB

crosvm supports attaching USB devices from the host by emulating an xHCI backend.

Unlike some other VM software such as QEMU, crosvm does not support attaching USB devices at boot time. However, we can tell the VM to attach the devices once the kernel has booted, as long as crosvm was started with a control socket (see the control socket section in advanced usage).

First, start crosvm making sure to specify the control socket:

$ crosvm run -s /run/crosvm.sock ${USUAL_CROSVM_ARGS}

Then, you need to identify which device you want to attach by looking for its USB bus and device number:

$ lsusb

Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Bus 002 Device 022: ID 18d1:4ee7 Google Inc. Pixel 5

Bus 002 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Assuming in this example that the device you want is the Google Inc. Pixel 5, its bus and device numbers are 002 and 022 respectively.

There should be a USB device file on the host at the path /dev/bus/usb/002/022, which is what you want to pass to the crosvm usb attach command:

# crosvm usb attach 00:00:00:00 /dev/bus/usb/002/022 /run/crosvm.sock

You can run this command as root or make sure your current user has permissions to access the device file. Also make sure the device is not currently attached to any other drivers on the host and is not already in use.

NOTE: You need to pass a string formatted like 00:00:00:00 as the first parameter to the usb attach command. This is a deprecated argument and is not used by crosvm, but it must be included for the command to work. It will be removed in the future.

On the host you should see a message like:

ok 9

This tells you that the operation succeeded and which port number the USB device is attached to (in this case 9).

Inside the VM you should see dmesg messages that the USB device has been attached successfully and you should be able to use it as normal.

If you want to detach the device, issue a detach command with the port number returned by the attach command:

# crosvm usb detach 9 /run/crosvm.sock

This should return another ok 9 confirmation.

Keep in mind that when a USB device is attached to a VM, it is in exclusive mode and cannot be used by the host or attached to other VMs.
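The lookup steps above turn an lsusb line into the device file passed to crosvm usb attach. Below is a Python sketch of that mapping. It assumes the lsusb output format shown above, and the helper names are hypothetical. The first argument of the command is the deprecated 00:00:00:00 placeholder described in the note.

```python
import re

# Matches lines such as "Bus 002 Device 022: ID 18d1:4ee7 Google Inc. Pixel 5".
LSUSB_LINE = re.compile(
    r"^Bus (?P<bus>\d{3}) Device (?P<dev>\d{3}): ID (?P<vid>[0-9a-f]{4}):(?P<pid>[0-9a-f]{4})"
)


def usb_device_path(lsusb_line):
    """Return the /dev/bus/usb/BUS/DEVICE path for one lsusb output line."""
    match = LSUSB_LINE.match(lsusb_line.strip())
    if match is None:
        raise ValueError(f"unrecognized lsusb line: {lsusb_line!r}")
    return f"/dev/bus/usb/{match['bus']}/{match['dev']}"


def usb_attach_command(lsusb_line, vm_socket="/run/crosvm.sock"):
    """Argument list for `crosvm usb attach`, including the deprecated placeholder."""
    return ["crosvm", "usb", "attach", "00:00:00:00", usb_device_path(lsusb_line), vm_socket]
```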

Wayland

If you have a Wayland compositor running on your host, it is possible to display and control guest applications from it. This requires:

- A guest kernel version 5.16 or above with CONFIG_DRM_VIRTIO_GPU enabled,
- The sommelier Wayland proxy in your guest image.

This section will walk you through the steps needed to get this to work.

Guest kernel requirements

Wayland support on crosvm relies on virtio-gpu contexts, which were introduced in Linux 5.16. Make sure your guest kernel is either this version or a more recent one, and that CONFIG_DRM_VIRTIO_GPU is enabled in your kernel configuration.

Crosvm requirements

Wayland forwarding requires the GPU feature and the virtio-gpu cross domain mode to be enabled.

cargo build --features "gpu"

Building sommelier

Sommelier is a proxy Wayland compositor that forwards the Wayland protocol from a guest to a compositor running on the host through the guest GPU device. As it is not a standard tool, we will have to build it ourselves. It is recommended to do this from the guest with networking enabled.

Clone ChromeOS' platform2 repository, which contains the source for sommelier:

git clone https://chromium.googlesource.com/chromiumos/platform2

Go into the sommelier directory and prepare for building:

cd platform2/vm_tools/sommelier/

meson setup build -Dwith_tests=false

This setup step will check for all libraries required to build sommelier. If some are missing, install them using your guest's distro package manager and re-run meson setup until it passes.

Finally, build sommelier and install it:

meson compile -C build

sudo meson install -C build

This last step will put the sommelier binary into /usr/local/bin.

Running guest Wayland apps

Crosvm can connect to a running Wayland server (e.g. weston) on the host and forward the protocol from all Wayland guest applications to it. To enable this you need to know the socket of the Wayland server running on your host; typically it would be $XDG_RUNTIME_DIR/wayland-0.

Once you have confirmed the socket, create a GPU device and enable forwarding by adding the --gpu=context-types=cross-domain --wayland-sock $XDG_RUNTIME_DIR/wayland-0 arguments to your crosvm command line. Other context types may also be enabled by those interested in 3D acceleration.
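If your compositor does not use wayland-0, the socket path can be resolved the way Wayland clients conventionally do. WAYLAND_DISPLAY names the socket, either relative to XDG_RUNTIME_DIR or as an absolute path, and it defaults to wayland-0. This is a Python sketch that fills the path into the two arguments above. The helper names are hypothetical.

```python
import os


def wayland_socket_path(env=None):
    """Locate the host compositor socket using WAYLAND_DISPLAY and XDG_RUNTIME_DIR."""
    env = os.environ if env is None else env
    display = env.get("WAYLAND_DISPLAY", "wayland-0")
    if os.path.isabs(display):
        return display  # WAYLAND_DISPLAY may already hold a full socket path
    return os.path.join(env["XDG_RUNTIME_DIR"], display)


def wayland_crosvm_args(env=None):
    """The two crosvm arguments from the text above, with the socket path filled in."""
    return ["--gpu=context-types=cross-domain", "--wayland-sock", wayland_socket_path(env)]
```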

You can now run Wayland clients through sommelier, e.g.:

sommelier --virtgpu-channel weston-terminal

Or

sommelier --virtgpu-channel gedit

Applications started that way should appear on and be controllable from the Wayland server running on your host.

The --virtgpu-channel option is currently necessary for sommelier to work with the setup of this document, but will likely not be required in the future.

If you have Xwayland installed in the guest you can also run X applications:

sommelier -X --xwayland-path=/usr/bin/Xwayland xeyes

Video (experimental)

The virtio video decoder and encoder devices allow a guest to leverage the host's hardware-accelerated video decoding and encoding capabilities. The specification (v3, v5) for these devices is still a work-in-progress, so testing them requires an out-of-tree kernel driver on the guest.

The virtio-video host device uses backends to perform the actual decoding. The currently supported backends are:

- libvda, a hardware-accelerated backend that supports both decoding and encoding by delegating the work to a running instance of Chrome. It can only be built and used in a ChromeOS environment.
- ffmpeg, a software-based backend that supports encoding and decoding. It exists to make testing and development of virtio-video easier, as it does not require any particular hardware and is based on a reliable codec library.

The rest of this document focuses solely on the ffmpeg backend. More accelerated backends will be added in the future.

Guest kernel requirements

The virtio_video branch of this kernel git repository contains a work-in-progress version of the virtio-video guest kernel driver, based on a (hopefully) recent version of mainline Linux. If you use this as your guest kernel, the virtio_video_defconfig configuration should allow you to easily boot from crosvm, with support for the video (and a few other) virtio devices built in.

Quick building guide after checking out this branch:

mkdir build_crosvm_x86

make O=build_crosvm_x86 virtio_video_defconfig

make O=build_crosvm_x86 -j16

The resulting kernel image that can be passed to crosvm will be in build_crosvm_x86/arch/x86/boot/bzImage.

Crosvm requirements

The virtio-video support is experimental and needs to be opted into through the "video-decoder" or "video-encoder" Cargo feature. In the instructions below we'll be using the FFmpeg backend, which requires the "ffmpeg" feature to be enabled as well.

The following example builds crosvm with FFmpeg encoder and decoder backend support:

cargo build --features "video-encoder,video-decoder,ffmpeg"

To enable the decoder device, start crosvm with the --video-decoder=ffmpeg command-line argument:

crosvm run --disable-sandbox --video-decoder=ffmpeg -c 4 -m 2048 --block /path/to/disk.img,root --serial type=stdout,hardware=virtio-console,console=true,stdin=true /path/to/bzImage

Alternatively, to enable the encoder device, start crosvm with the --video-encoder=ffmpeg command-line argument:

crosvm run --disable-sandbox --video-encoder=ffmpeg -c 4 -m 2048 --block /path/to/disk.img,root --serial type=stdout,hardware=virtio-console,console=true,stdin=true /path/to/bzImage

If the guest kernel includes the virtio-video driver, then the device should be probed and show up.

Testing the device from the guest

Video capabilities are exposed to the guest using V4L2. The encoder or decoder device should appear as /dev/videoX, probably /dev/video0 if there are no additional V4L2 devices.

Checking capabilities and formats

v4l2-ctl, part of the v4l-utils package, can be used to test the device's existence.

Example output for the decoder is shown below.

v4l2-ctl -d/dev/video0 --info

Driver Info:

Driver name : virtio-video

Card type : ffmpeg

Bus info : virtio:stateful-decoder

Driver version : 5.17.0

Capabilities : 0x84204000

Video Memory-to-Memory Multiplanar

Streaming

Extended Pix Format

Device Capabilities

Device Caps : 0x04204000

Video Memory-to-Memory Multiplanar

Streaming

Extended Pix Format
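The Capabilities and Device Caps values printed above are bitmasks of V4L2 capability flags, and v4l2-ctl lists the names of the bits that are set. The sketch below decodes them using the flag values from the Linux UAPI header linux/videodev2.h. Only the four flags that appear in this output are included; the helper name decode_caps is hypothetical.

```rust
// Capability flags as defined in <linux/videodev2.h>.
const V4L2_CAP_VIDEO_M2M_MPLANE: u32 = 0x0000_4000;
const V4L2_CAP_EXT_PIX_FORMAT: u32 = 0x0020_0000;
const V4L2_CAP_STREAMING: u32 = 0x0400_0000;
const V4L2_CAP_DEVICE_CAPS: u32 = 0x8000_0000;

// Names in the order v4l2-ctl prints them for this device.
const KNOWN_CAPS: [(u32, &str); 4] = [
    (V4L2_CAP_VIDEO_M2M_MPLANE, "Video Memory-to-Memory Multiplanar"),
    (V4L2_CAP_STREAMING, "Streaming"),
    (V4L2_CAP_EXT_PIX_FORMAT, "Extended Pix Format"),
    (V4L2_CAP_DEVICE_CAPS, "Device Capabilities"),
];

/// Returns the names of the known flags set in `caps`, plus any bits left unexplained.
fn decode_caps(caps: u32) -> (Vec<&'static str>, u32) {
    let mut names = Vec::new();
    let mut rest = caps;
    for &(bit, name) in KNOWN_CAPS.iter() {
        if caps & bit != 0 {
            names.push(name);
            rest &= !bit; // clear the bit we recognized
        }
    }
    (names, rest)
}
```

Decoding 0x84204000 yields all four names with no leftover bits, while 0x04204000 lacks only the Device Capabilities bit, which marks the driver-wide value rather than the per-node one.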

Note that the Card type is ffmpeg, indicating that decoding will be performed in software on the host. We can then query the supported input (OUTPUT in V4L2-speak) formats, i.e. the encoded formats we can send to the decoder:

v4l2-ctl -d/dev/video0 --list-formats-out

ioctl: VIDIOC_ENUM_FMT

Type: Video Output Multiplanar

[0]: 'VP90' (VP9, compressed)

[1]: 'VP80' (VP8, compressed)

[2]: 'HEVC' (HEVC, compressed)

[3]: 'H264' (H.264, compressed)

Similarly, you can check the supported output (or CAPTURE) pixel formats for decoded frames:

v4l2-ctl -d/dev/video0 --list-formats

ioctl: VIDIOC_ENUM_FMT

Type: Video Capture Multiplanar

[0]: 'NV12' (Y/CbCr 4:2:0)
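The four-character names such as 'VP90', 'H264' and 'NV12' are V4L2 FourCC pixel format codes: four ASCII bytes packed into a u32 with the first character in the lowest byte, as done by the v4l2_fourcc() macro in linux/videodev2.h. A minimal Rust equivalent, with hypothetical function names, looks like this:

```rust
/// Packs four ASCII characters into a V4L2 pixel format code,
/// mirroring the v4l2_fourcc() macro (first character in the low byte).
fn v4l2_fourcc(code: &[u8; 4]) -> u32 {
    (code[0] as u32) | ((code[1] as u32) << 8) | ((code[2] as u32) << 16) | ((code[3] as u32) << 24)
}

/// Inverse of v4l2_fourcc(): recovers the printable code, e.g. for logging.
fn fourcc_to_string(fourcc: u32) -> String {
    fourcc.to_le_bytes().iter().map(|&b| b as char).collect()
}
```

For example, v4l2_fourcc(b"NV12") is 0x3231564e, the value of V4L2_PIX_FMT_NV12.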

Test decoding with ffmpeg

FFmpeg can be used to decode video streams with the virtio-video device.

Simple VP8 stream:

wget https://github.com/chromium/chromium/raw/main/media/test/data/test-25fps.vp8

ffmpeg -codec:v vp8_v4l2m2m -i test-25fps.vp8 test-25fps-%d.png

This should create 250 PNG files, each containing a decoded frame from the stream.

WEBM VP9 stream:

wget https://test-videos.co.uk/vids/bigbuckbunny/webm/vp9/720/Big_Buck_Bunny_720_10s_1MB.webm

ffmpeg -codec:v vp9_v4l2m2m -i Big_Buck_Bunny_720_10s_1MB.webm Big_Buck_Bunny-%d.png

This should create 300 PNG files at 720p resolution.
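The expected counts follow from duration times frame rate: 250 frames at 25 fps is 10 seconds, and 300 frames for the 10-second Big Buck Bunny clip implies 30 fps. To spot dropped frames, you can check the output directory against the file names produced by the %d pattern. The sketch below is a hypothetical helper and assumes FFmpeg's image2 muxer default of numbering output files from 1.

```rust
use std::path::Path;

/// File names produced by an FFmpeg output pattern such as "test-25fps-%d.png",
/// assuming numbering starts at 1 (the image2 muxer default).
fn expected_frame_names(prefix: &str, count: u32) -> Vec<String> {
    (1..=count).map(|i| format!("{}-{}.png", prefix, i)).collect()
}

/// Lists the expected frame files that do not exist in `dir`.
fn missing_frames(dir: &Path, prefix: &str, count: u32) -> Vec<String> {
    expected_frame_names(prefix, count)
        .into_iter()
        .filter(|name| !dir.join(name).exists())
        .collect()
}
```

For the VP8 example, missing_frames(Path::new("."), "test-25fps", 250) should return an empty list if every frame was decoded.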

Test decoding with v4l2r

The v4l2r Rust crate also features an example program that can use this driver to decode simple H.264 streams:

git clone https://github.com/Gnurou/v4l2r

cd v4l2r

wget https://github.com/chromium/chromium/raw/main/media/test/data/test-25fps.h264

cargo run --example simple_decoder test-25fps.h264 /dev/video0 --input_format h264 --save test-25fps.nv12

This will decode test-25fps.h264 and write the raw decoded frames in NV12 format into test-25fps.nv12. You can check the result with e.g. YUView.
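Raw viewers such as YUView need the frame dimensions because an NV12 dump has no header. Each NV12 frame is a full-resolution Y plane followed by one interleaved CbCr plane subsampled 2x in both directions, so a frame takes width × height × 3/2 bytes. The sketch below assumes even dimensions and a tightly packed dump with no row or plane padding; if the decoder writes buffers padded to an aligned coded size, the frame size will be larger. The function names are hypothetical.

```rust
/// Size in bytes of one tightly packed NV12 frame (4:2:0):
/// a width*height luma plane plus an interleaved CbCr plane of half that size.
fn nv12_frame_size(width: usize, height: usize) -> usize {
    width * height + width * height / 2
}

/// Number of complete frames in a raw NV12 dump, or None if the file length
/// is not an exact multiple of the frame size (wrong dimensions or padding).
fn nv12_frame_count(file_len: usize, width: usize, height: usize) -> Option<usize> {
    let frame = nv12_frame_size(width, height);
    if frame == 0 || file_len % frame != 0 {
        None
    } else {
        Some(file_len / frame)
    }
}
```

A None result is a quick hint that the dimensions given to the viewer do not match the stream.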

Test encoding with ffmpeg

FFmpeg can be used to encode video streams with the virtio-video device.

The following examples generate a test clip through libavfilter and encode it with the virtual H.264, H.265 and VP8 encoders, respectively. (VP9 v4l2m2m support is missing in FFmpeg for some reason.)

# H264

ffmpeg -f lavfi -i smptebars=duration=10:size=640x480:rate=30 \
  -pix_fmt nv12 -c:v h264_v4l2m2m smptebars.h264.mp4

# H265

ffmpeg -f lavfi -i smptebars=duration=10:size=640x480:rate=30 \
  -pix_fmt yuv420p -c:v hevc_v4l2m2m smptebars.h265.mp4

# VP8

ffmpeg -f lavfi -i smptebars=duration=10:size=640x480:rate=30 \
  -pix_fmt yuv420p -c:v vp8_v4l2m2m smptebars.vp8.webm
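The three commands differ only in the encoder name (<codec>_v4l2m2m), the input pixel format and the output file, whose extension selects the container. When scripting these tests, it can help to keep that variation in a table. The sketch below is a hypothetical helper that reproduces the exact arguments of the commands above; it does not run FFmpeg itself.

```rust
/// One row per encoder exercised in the commands above.
struct EncodeJob {
    encoder: &'static str, // FFmpeg V4L2 mem2mem encoder name
    pix_fmt: &'static str, // pixel format fed to the encoder
    output: &'static str,  // output file; the extension selects the container
}

const JOBS: [EncodeJob; 3] = [
    EncodeJob { encoder: "h264_v4l2m2m", pix_fmt: "nv12", output: "smptebars.h264.mp4" },
    EncodeJob { encoder: "hevc_v4l2m2m", pix_fmt: "yuv420p", output: "smptebars.h265.mp4" },
    EncodeJob { encoder: "vp8_v4l2m2m", pix_fmt: "yuv420p", output: "smptebars.vp8.webm" },
];

/// Builds the FFmpeg argument list for one job, using a 10 s, 640x480, 30 fps SMPTE bars source.
fn ffmpeg_args(job: &EncodeJob) -> Vec<String> {
    let source = "smptebars=duration=10:size=640x480:rate=30";
    ["-f", "lavfi", "-i", source, "-pix_fmt", job.pix_fmt, "-c:v", job.encoder, job.output]
        .iter()
        .map(|s| s.to_string())
        .collect()
}
```

Each list can be passed to std::process::Command::new("ffmpeg").args(...) to run the corresponding encode inside the guest.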

Virtual U2F Passthrough

crosvm supports sharing a single u2f USB device between the host and the guest. Unlike normal USB devices, which must be exclusively attached to one VM, a single security key can be shared between multiple VMs and the host in a non-exclusive manner using the attach_key command.

A generic hardware security key that supports the fido1/u2f protocol should appear as a /dev/hidraw interface on the host, like this:

$ lsusb

Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

Bus 003 Device 018: ID 1050:0407 Yubico.com YubiKey OTP+FIDO+CCID

Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

$ ls /dev/hidraw*

/dev/hidraw0 /dev/hidraw1

In this example, the physical YubiKey presents both a keyboard interface (/dev/hidraw0) and a u2f-hid interface (/dev/hidraw1). Crosvm supports passing the /dev/hidraw1 interface to the guest via the crosvm usb attach_key command.

First, start crosvm making sure to specify a control socket:

$ crosvm run -s /run/crosvm.sock ${USUAL_CROSVM_ARGS}

Since the virtual u2f device is surfaced as a generic HID device, make sure your guest kernel is built with support for HID devices. Specifically, it needs CONFIG_HID, CONFIG_HIDRAW, CONFIG_HID_GENERIC, and CONFIG_USB_HID enabled.

Once the VM is launched, attach the security key with the following command on the host:

$ crosvm usb attach_key /dev/hidraw1 /run/crosvm.sock

ok 1

The virtual security key will show up inside the guest as a Google USB device with Vendor and Product IDs 18d1:f1d0:

$ lsusb

Bus 002 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

Bus 001 Device 002: ID 18d1:f1d0 Google Inc.

Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
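If you want to check for the virtual key from a test script in the guest, the vendor:product pair can be extracted from each lsusb line. The sketch below is a hypothetical helper that assumes the default lsusb line layout shown above ("Bus ... Device ...: ID vvvv:pppp Name").

```rust
/// Extracts the (vendor, product) ID pair from one line of `lsusb` output,
/// e.g. "Bus 001 Device 002: ID 18d1:f1d0 Google Inc.".
fn parse_lsusb_ids(line: &str) -> Option<(u16, u16)> {
    let rest = line.split(" ID ").nth(1)?; // text after " ID "
    let id = rest.split_whitespace().next()?; // "18d1:f1d0"
    let (vendor, product) = id.split_once(':')?;
    Some((
        u16::from_str_radix(vendor, 16).ok()?,
        u16::from_str_radix(product, 16).ok()?,
    ))
}

/// IDs of the virtual security key exposed by crosvm.
const VIRTUAL_U2F_ID: (u16, u16) = (0x18d1, 0xf1d0);

/// Returns true if any line of the lsusb output shows the virtual security key.
fn has_virtual_u2f(lsusb_output: &str) -> bool {
    lsusb_output
        .lines()
        .filter_map(parse_lsusb_ids)
        .any(|ids| ids == VIRTUAL_U2F_ID)
}
```

Run against the host listing earlier on this page, has_virtual_u2f returns false, since the physical YubiKey reports 1050:0407.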

You can verify that the correct hidraw device has been created in the /dev/ tree:

$ ls /dev/hidraw*

/dev/hidraw0

The device should now be usable as a u2f-supported security key both inside the guest and on the host. It can also be attached to other crosvm instances at the same time.

Vhost-user devices

Crosvm supports vhost-user devices for most virtio devices (block, net, etc.) so that device emulation can be done outside of the main VMM process.

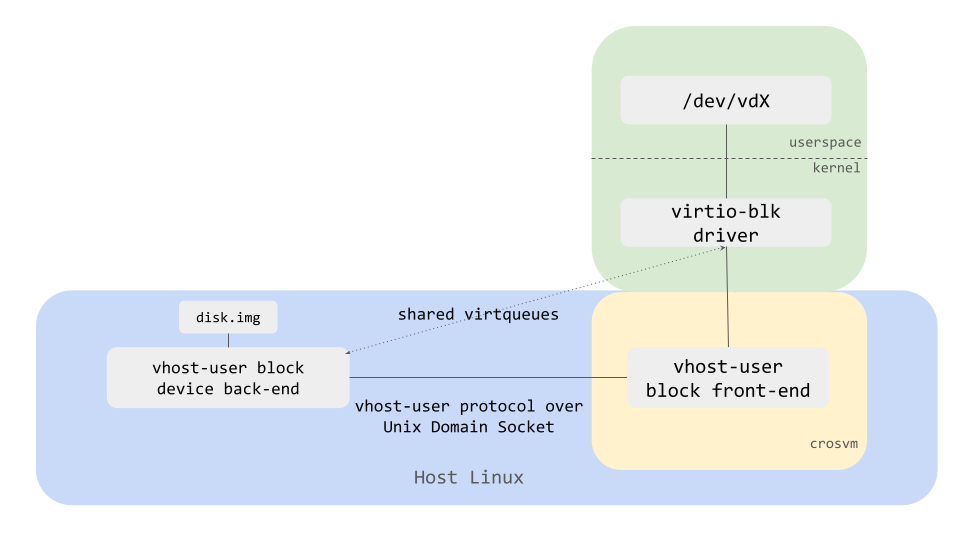

The diagram above shows how a vhost-user block device back-end (implementing the actual disk in userspace) and a vhost-user block front-end (implementing the device facing the guest OS) in the crosvm VMM work together.

How to run

Let's take a block device as an example and see how to start vhost-user devices.

First, start the vhost-user block backend with the crosvm devices command, which waits for a VMM process to connect to the socket.

# One-time commands to create a disk image.

fallocate -l 1G disk.img

mkfs.ext4 disk.img

VHOST_USER_SOCK=/tmp/vhost-user.socket

# Start vhost-user block backend listening on $VHOST_USER_SOCK

crosvm devices --block vhost=${VHOST_USER_SOCK},path=disk.img

Then, open another terminal and start a VMM process (the frontend) with the --vhost-user flag.

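# The new terminal does not inherit VHOST_USER_SOCK; use the same path as the backend.

VHOST_USER_SOCK=/tmp/vhost-user.socket

crosvm run \
  --vhost-user block,socket="${VHOST_USER_SOCK}" \
  <usual crosvm arguments> \
  /path/to/bzImage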

As a result, disk.img should be exposed as /dev/vda just like with --block disk.img.
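The order matters because the backend owns the socket: it binds and listens first, and the VMM connects to it as a client. The sketch below illustrates only that listen-then-connect ordering with plain Unix sockets from the Rust standard library. It does not implement the vhost-user protocol, and run_backend, run_frontend and demo are hypothetical names.

```rust
use std::io::{Read, Write};
use std::os::unix::net::{UnixListener, UnixStream};
use std::path::Path;
use std::thread;

/// Hypothetical backend: blocks until a frontend connects, like `crosvm devices`
/// waiting for the VMM. It just reads bytes; real vhost-user messages are not modeled.
fn run_backend(listener: UnixListener) -> std::io::Result<Vec<u8>> {
    let (mut conn, _addr) = listener.accept()?;
    let mut buf = Vec::new();
    conn.read_to_end(&mut buf)?; // returns once the frontend closes its end
    Ok(buf)
}

/// Hypothetical frontend: connects to the path the backend is listening on.
fn run_frontend(path: &Path, msg: &[u8]) -> std::io::Result<()> {
    let mut stream = UnixStream::connect(path)?;
    stream.write_all(msg)
}

fn demo(path: &Path) -> std::io::Result<Vec<u8>> {
    let _ = std::fs::remove_file(path); // a stale socket file would make bind() fail
    let listener = UnixListener::bind(path)?; // backend must be listening first
    let backend = thread::spawn(move || run_backend(listener));
    run_frontend(path, b"hello")?;
    backend.join().expect("backend thread panicked")
}
```

If the frontend starts before anything is bound to the path, UnixStream::connect fails, which is why the backend is started first in the commands above.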

Tracing

Crosvm supports tracing to allow developers to debug and diagnose problems and check performance optimizations.

The crate cros_tracing is used as a frontend for trace points across the crosvm codebase. It is disabled by default but can be enabled with a compile-time flag. It is written to be extensible and to support multiple backends.

The currently supported backends are:

- noop: No tracing is enabled. All trace points are compiled out of the application so there is no performance degradation. This is the default backend when no tracing flag is provided.

- trace_marker: ftrace backend to log trace events to the Linux kernel. Only supported on Linux systems. Enabled by compiling crosvm with the --features trace_marker flag. (On CrOS it is the USE flag crosvm-trace-marker.)
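The idea of choosing a backend at compile time can be illustrated with cfg attributes. The sketch below is hypothetical and is not the cros_tracing API: trace_event and tracing_backend are made-up names. With the trace_marker feature it writes to the ftrace marker file, assuming tracefs is mounted at /sys/kernel/tracing; without it, trace_event is an empty inline function that the optimizer removes.

```rust
/// Reports which backend this sketch was compiled with.
fn tracing_backend() -> &'static str {
    if cfg!(feature = "trace_marker") { "trace_marker" } else { "noop" }
}

#[cfg(feature = "trace_marker")]
fn trace_event(msg: &str) {
    use std::io::Write;
    // Assumed tracefs location; older systems use /sys/kernel/debug/tracing.
    if let Ok(mut marker) = std::fs::OpenOptions::new()
        .write(true)
        .open("/sys/kernel/tracing/trace_marker")
    {
        // Errors are ignored so that tracing never breaks the traced program.
        let _ = marker.write_all(msg.as_bytes());
    }
}

#[cfg(not(feature = "trace_marker"))]
#[inline(always)]
fn trace_event(_msg: &str) {
    // noop backend: empty body, so calls optimize away entirely.
}
```

Reopening the marker file on every event keeps the sketch short; a real backend would more likely open it once and reuse the handle.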

cros_tracing Overview

The cros_tracing API consists of the following: